I’ve been running Windows Home Server for just under a year now and thought I’d take a little time to explain my setup in detail and explain why I use this product when I could also simply build a Linux server to do many of the things handled by WHS.

My setup

Late last year, I bought an HP MediaSmart EX470 Windows Home Server for a project I was working on. Prior to buying the MediaSmart system, I had built a custom system with an evaluation copy of Windows Home Server provided by Microsoft, and gave it up in favor of the HP server. The HP MediaSmart systems ship with a paltry 512MB of RAM, but, with a little know-how, it’s not all that hard to upgrade to 2GB of RAM, which is almost a must. Frankly, HP will probably have to address the RAM issue at some point and give customers the option of easily expanding the RAM without voiding the warranty. The EX470 ships with a single 500GB hard drive. In order to enjoy the full benefit of Windows Home Server, you really need multiple hard drives. Since installing my server, I’ve added three more 500GB drives for a total of 2TB capacity. While that sounds like a ton of space, due to the way that WHS uses disk space, it’s actually less than it sounds like. This is not meant to be a negative point… just fact.

The MediaSmart server includes a gigabit Ethernet port and I’ve connected it, as well as my two primary workstations, to a gigabit Ethernet switch. I also use a wireless-N network at home to connect my wife’s Windows desktop computer and my MacBook to the network. I run VMware Fusion on my MacBook so I can run Windows programs.

How I use WHS

I save almost everything to my Windows Home Server. I write a lot, so all of my work is stored there, as is my iTunes library, backups of my DVDs and a lot more. All of the computers in my house are automatically backed up to my server, too. I have personally used WHS’ client restoration capability to restore a client computer, and the procedure is absolutely fantastic and surprisingly easy to use.

Although WHS Power Pack 1 now includes the ability to back up the Windows Home Server to an external hard drive, a feature that was missing from the OEM release, I’ve opted to use Windows Home Server Gold Plan ($199/year, but right now, $99/year special) to automatically back up my Windows Home Server to KeepVault’s servers. I’ve been using KeepVault for almost a year now and am very pleased. The only disadvantage to this method is that KeepVault won’t back up files that are larger than 5GB in size, but KeepVault provides unlimited storage space. The only files I have that are larger than 5GB in size are generally ISO files and virtual machine images and, if I so desired, I could take steps to protect even these files. However, for performance reasons, I don’t run my virtual machines from my server anyway, although I would give it a shot if WHS included a good way to handle iSCSI.

With the Power Pack 1 release, WHS is finally ready for prime time. Prior to this release, WHS suffered from a serious data corruption bug to which, unfortunately, I fell victim. The resulting damage was more of an annoyance as I had to work around it, but as I said, PP1 fixes this issue and adds some additional capability.

Windows Home Server includes very good remote access capability, too. When I’m on the road for business, I don’t have to try to remember exactly which files I need to take with me. If I forget something, I can just browse to my server and get the file. Configuring this capability is a breeze, too, as long as you have a router that supports UPnP, which I do. Otherwise, it would take manual router configuration, making WHS less than desirable for the average home user.

Could I have replicated this functionality with Linux, other open source products and some scripts? Sure. Would it have worked? Well, probably not as seamlessly. Even something like WHS is a tool for me and I’ve gotten to a point where I just need stuff to work so that I can focus on getting a job done. My WHS system protects my files at two levels (locally in the event of a client failure, and remotely in the event of a server failure) and gives me an easy way to get to my information if necessary.

Although the market need is still somewhat questionable, WHS is aimed at users who lack the technical expertise to build computers from scratch or who want to focus on the end result of the product: a working, stable server. If you enjoy the thrill of building something from scratch, WHS is probably not for you. For me, however, it’s a perfect complement to my clients and perfectly fits my work style.

Tuesday, October 28, 2008

Help! My SQL Server Log File is too big!!!

Over the years, I have assisted so many different clients whose transaction log file has become “too large” that I thought it would be helpful to write about it. The issue can be a system-crippling problem, but it can be easily avoided. Today I’ll look at what causes your transaction logs to grow too large, and what you can do to curb the problem.

Note: For the purposes of today’s article, I will assume that you’re using SQL Server 2005 or later.

Every SQL Server database has at least two files: a data file and a transaction log file. The data file stores user and system data while the transaction log file stores all transactions and database modifications made by those transactions. As time passes, more and more database transactions occur and the transaction log needs to be maintained. If your database uses the Simple recovery model, inactive transactions are truncated from the transaction log after the Checkpoint process occurs. The Checkpoint process writes all modified data pages from memory to disk. When the Checkpoint is performed, the inactive portion of the transaction log is marked as reusable.

Transaction Log Backups

If your database recovery model is set to Full or Bulk-Logged, then it is absolutely VITAL that you make transaction log backups to go along with your full backups. SQL Server 2005 databases are set to the Full recovery model by default, so you may need to start creating log backups even if you haven’t run into problems yet. The following query can be used to determine the recovery model of the databases on your SQL Server instance.

SELECT name, recovery_model_desc
FROM sys.databases

Before going into the importance of transaction log backups, I must stress the importance of creating Full database backups. If you are not currently creating Full database backups and your database contains data that you cannot afford to lose, you absolutely need to start. Full backups are the starting point for any type of recovery process, and are critical to have in case you run into trouble. In fact, you cannot create transaction log backups without first having created a full backup at some point.

The Full or Bulk-logged Recovery Mode

With the Full or Bulk-Logged recovery mode, inactive transactions remain in the transaction log file until after a Checkpoint is processed and a transaction log backup is made. Note that a full backup does not remove inactive transactions from the transaction log. The transaction log backup performs a truncation of the inactive portion of the transaction log, allowing it to be reused for future transactions. This truncation does not shrink the file; it only allows the space in the file to be reused (more on file shrinking a bit later). It is these transaction log backups that keep your transaction log file from growing too large. An easy way to make consistent transaction log backups is to include them as part of your database maintenance plan.

If your database recovery model is set to FULL, and you’re not creating transaction log backups and never have, you may want to consider switching your recovery mode to Simple. The Simple recovery mode should take care of most of your transaction log growth problems because the log truncation occurs after the Checkpoint process. You’ll not be able to recover your database to a point in time using Simple, but if you weren’t creating transactional log backups to begin with, restoring to a point in time wouldn’t have been possible anyway. To switch your recovery model to Simple mode, issue the following statement in your database.

ALTER DATABASE YourDatabaseName
SET RECOVERY SIMPLE

Not performing transaction log backups is probably the main cause of your transaction log growing too large. However, there are other situations that prevent inactive transactions from being removed even if you’re creating regular log backups. The following query can be used to get an idea of what might be preventing your transaction log from being truncated.

SELECT name, log_reuse_wait_desc
FROM sys.databases

Long-Running Active Transactions

A long-running transaction can prevent transaction log truncation. These types of transactions can range from transactions being blocked from completing to open transactions waiting for user input. In any case, the transaction ensures that the log remains active from the start of the transaction. The longer the transaction remains open, the larger the transaction log can grow. To see the oldest active transaction in the current database, run the following statement.

DBCC OPENTRAN

If there are open transactions, DBCC OPENTRAN will provide a session_id (SPID) of the connection that has the transaction open. You can pass this session_id to sp_who2 to determine which user has the connection open.

EXECUTE sp_who2 spid --from DBCC OPENTRAN

Alternatively, you can run the following query to determine the user.

SELECT * FROM sys.dm_exec_sessions
WHERE session_id = spid --from DBCC OPENTRAN

You can determine the SQL statement being executed inside the transaction in a couple of different ways. First, you can use the DBCC INPUTBUFFER() statement to return the first part of the SQL statement:

DBCC INPUTBUFFER(spid) --from DBCC OPENTRAN

Alternatively, you can use a dynamic management view included in SQL Server 2005 to return the SQL statement:

SELECT
    r.session_id,
    r.blocking_session_id,
    s.program_name,
    s.host_name,
    t.text
FROM
    sys.dm_exec_requests r
    INNER JOIN sys.dm_exec_sessions s ON r.session_id = s.session_id
    CROSS APPLY sys.dm_exec_sql_text(r.sql_handle) t
WHERE
    s.is_user_process = 1 AND
    r.session_id = spid --from DBCC OPENTRAN

Backups

Log truncation cannot occur during a backup or restore operation. In SQL Server 2005 and later, you can create a transaction log backup while a full or differential backup is occurring, but the log backup will not truncate the log because the entire transaction log needs to remain available to the backup operation. If a database backup is keeping your log from being truncated, you might consider cancelling the backup to relieve the immediate problem.

Transactional Replication

With transactional replication, the inactive portion of the transaction log is not truncated until transactions have been replicated to the distributor. A backlog may build up because the distributor is overloaded and having problems accepting these transactions, or because the Log Reader agent should be run more often. If DBCC OPENTRAN indicates that your oldest active transaction is a replicated one and it has been open for a significant amount of time, this may be your problem.

Database Mirroring

Database mirroring is somewhat similar to transactional replication in that it requires that the transactions remain in the log until the record has been written to disk on the mirror server. If the mirror server instance falls behind the principal server instance, the amount of active log space will grow. In this case, you may need to stop database mirroring, take a log backup that truncates the log, apply that log backup to the mirror database and restart mirroring.

Disk Space

It is possible that you’re just running out of disk space and that this is causing your transaction log to raise errors. You might be able to free disk space on the disk drive that contains the transaction log file for the database by deleting or moving other files. The freed disk space will allow the log file to grow. If you cannot free enough disk space on the drive that currently contains the log file, then you may need to move the file to a drive with enough space to handle the log. If your log file is not set to grow automatically, you’ll want to consider changing that or adding additional space to the file. Another option is to create a new log file for the database on a different disk that has enough space by using the ALTER DATABASE YourDatabaseName ADD LOG FILE syntax.
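As a rough sketch of that last option, the statement below adds a second log file on another drive. The logical file name, the E:\SQLLogs path and the size and growth values are all placeholders I made up for illustration; substitute values that suit your own server.

```sql
-- Sketch only: the logical name, path, size and growth are hypothetical.
ALTER DATABASE YourDatabaseName
ADD LOG FILE
(
    NAME = YourDatabaseName_Log2,                        -- logical file name (assumed)
    FILENAME = 'E:\SQLLogs\YourDatabaseName_Log2.ldf',   -- drive with free space (assumed)
    SIZE = 1GB,                                          -- initial size
    FILEGROWTH = 256MB                                   -- fixed growth increment
);
```

The folder must already exist and the SQL Server service account needs permission to write to it.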

Shrinking the File

Once you have identified your problem and have been able to truncate your log file, you may need to shrink the file back to a manageable size. You should avoid shrinking your files on a consistent basis as it can lead to fragmentation issues. However, if you’ve performed a log truncation and need your log file to be smaller, you’re going to need to shrink your log file. You can do it through Management Studio by right-clicking the database, selecting Tasks, then Shrink, and then choosing Database or Files. If I am using the Management Studio interface, I generally select Files and shrink only the log file.

This can also be done using T-SQL. The following query, run in the database in question, will find the name of my log file. I’ll need this to pass to the DBCC SHRINKFILE command.

SELECT name
FROM sys.database_files
WHERE type_desc = 'LOG'

Once I have my log file name, I can use the DBCC command to shrink the file. In the following command I try to shrink my log file down to roughly 1GB (the target size is specified in megabytes).

DBCC SHRINKFILE ('SalesHistory_Log', 1000)

Also, make sure that your databases are NOT set to auto-shrink. Databases that are shrunk at continuous intervals can encounter real performance problems.
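One way to check for auto-shrink, sketched here with the same placeholder database name used earlier, is to look at the is_auto_shrink_on column in sys.databases and switch the option off wherever it is set.

```sql
-- List databases that currently have auto-shrink enabled.
SELECT name
FROM sys.databases
WHERE is_auto_shrink_on = 1;

-- Turn the option off for one database (YourDatabaseName is a placeholder).
ALTER DATABASE YourDatabaseName
SET AUTO_SHRINK OFF;
```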

TRUNCATE_ONLY and NOLOG

If you’re a DBA and have run into one of the problems listed in this article before, you might be asking yourself why I haven’t mentioned just using TRUNCATE_ONLY to truncate the log directly without creating the log backup. The reason is that in almost all circumstances you should avoid doing it. Doing so breaks the transaction log chain, which makes recovering to a point in time impossible: you lose the transactions that have occurred since the last transaction log backup, and you will not be able to recover any future transactions until a differential or full database backup has been created. This method is so discouraged that Microsoft is not including it in SQL Server 2008 and future versions of the product. I’ll include the syntax here to be thorough, but you should try to avoid using it at all costs.

BACKUP LOG SalesHistory
WITH TRUNCATE_ONLY

It is just as easy to perform the following BACKUP LOG statement to actually create the log backup to disk.

BACKUP LOG SalesHistory
TO DISK = 'C:/SalesHistoryLog.bak'

Moving forward

Today I took a look at several different things that can cause your transaction log file to become too large and some ideas as to how to overcome your problems. These solutions range from correcting your code so that transactions do not remain open so long, to creating more frequent log backups. In addition to these solutions, you should also consider adding notifications to your system to let you know when your database files are reaching a certain threshold. The more proactive you are in terms of alerts for these types of events, the better chance you’ll have to correct the issue before it turns into a real problem.
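As one possible starting point for such a notification, the sketch below captures the output of DBCC SQLPERF(LOGSPACE) into a temporary table and lists any database whose log is more than 80 percent full. The temp table, its column names and the 80 percent threshold are my own assumptions; a scheduled job could run something like this and send an alert when rows come back.

```sql
-- Temp table shaped to match the four columns DBCC SQLPERF(LOGSPACE) returns.
-- The column names here are arbitrary choices for this sketch.
CREATE TABLE #LogSpace
(
    DatabaseName    sysname,
    LogSizeMB       float,
    LogSpaceUsedPct float,
    Status          int
);

INSERT INTO #LogSpace
EXECUTE ('DBCC SQLPERF(LOGSPACE)');

-- 80 is a hypothetical threshold; pick one that fits your environment.
SELECT DatabaseName, LogSizeMB, LogSpaceUsedPct
FROM #LogSpace
WHERE LogSpaceUsedPct > 80
ORDER BY LogSpaceUsedPct DESC;

DROP TABLE #LogSpace;
```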

The top four mistakes organizations make when building datacenters

I had the opportunity to speak with Etienne Guerou, who is the Vice President, a company - a world leader in power solutions. Over a cup of coffee, Mr. Guerou, who has 20 years of experience in designing and building datacenters, briefed me on some of the top mistakes that IT professionals and decision-makers make when building their own datacenters.

Here are the main mistakes he outlined:

1. Harboring the wrong appreciation of a datacenter

One typical mistake is that IT professionals and decision makers don’t differentiate between datacenters. Instead, they treat a datacenter as an all-inclusive black box where “many” servers are to be housed. That mindset is typically exposed when they are confronted with the simple question: “What do you intend to use your datacenter for?”

Ask yourself about the scale and anticipated usage of the datacenter, expansion plans of at least two to three years down the road, whether blade servers or standard rack mount servers will be utilized, etc. When you answer these questions, you can then extrapolate power consumption, as well as current and future capacities in terms of cabling, cooling and power.

2. Attempting to run a datacenter from improper facilities

It would be a mistake to simply acquire an ad-hoc facility and have it rebadged as a datacenter without a proper appraisal of its suitability, cautions Guerou. He cites an example in which a client, after having signed the lease for a fairly large space, sought out Mr. Guerou’s advice on how to proceed. To the client’s horror, the answer was that the venue was simply not suitable for a datacenter due to granite flooring and thick beams across the ceiling - resulting in an effective height that was simply inadequate for cabling and cooling purposes.

While it might not be possible for most organizations to put up custom-built datacenters on a whim, what this client should have done was get an experienced consultant in and involved right from the get-go.

Ideally, in Mr. Guerou’s own words: “A datacenter should be a technical building dedicated to a very particular business of processing data.”

3. Buying by brands

Another common mistake is that many IT professionals attempt to buy into selected brands. While this strategy might work well when it comes to standardizing on servers or networking gear, an efficient and well-run datacenter has nothing to do with specific hardware brands or models. Rather, you should approach the datacenter from the perspective of a complete solution, where the entire design has to be considered as an integrated whole.

As an unfortunate side-effect of strong marketing by enterprise vendors, many users have, consciously or subconsciously, bought into the idea of designing a datacenter by snapping together disparate pieces of hardware. While that approach is not wrong in itself, it’s imperative that the end-result be evaluated as a whole - and not in a piecemeal fashion.

Various hardware, such as types of servers, positioning of racks, networking equipment and redundant power supplies should dovetail properly with infrastructure such as cooling, ventilation, wiring, fire-suppression systems, and security measures.

4. Rushing onto the “Green IT” bandwagon

The increasing popularity of “Green IT” has vendors unveiling new servers and equipment touted for their superior power efficiency. While the idea is definitely laudable, you should separate the marketing hype from actual operational consumption.

For example, two UPS units from “vendor X,” while individually more power efficient at 90% loading, might actually offer a much poorer showing if deployed in a redundant configuration, where they will end up running at 45% loading. In the absence of proper scrutiny, green IT initiatives could degenerate into a numbers game.

At the end of the day, you should take the overall power efficiency (or power factor) of the entire datacenter as a benchmark rather than weighing individual vendor claims. After all, power efficiency has always been a yardstick of a well-run datacenter.

In parting, Mr. Guerou has the following advice for organizations thinking of building their own datacenter: “Hire an experienced consultant.”

Here are the main mistakes he outlined:

1. Harboring the wrong appreciation of a datacenter

One typical mistake is that would be that IT professionals and decision makers don’t differentiate between datacenters. Instead, they treat a datacenter as an all-inclusive black box where “many” servers are to be housed. That mindset is typically exposed when confronted with the simple question: “What do you intend to use your datacenter for?”

Ask yourself about the scale and anticipated usage of the datacenter, expansion plans of at least two to three years down the road, whether blade servers or standard rack mount servers will be utilized, etc. When you answer these questions, you can then extrapolate power consumption, as well as current and future capacities in terms of cabling, cooling and power.

2. Attempting to run a datacenter from improper facilities

It would be a mistake to simply acquire an ad-hoc facility and have it rebadged as a datacenter without a proper appraisal of its suitability, cautions Guerou. He cites an example in which a client, after having signed the lease for a fairly large space, sought out Mr. Guerou’s advice on how to proceed. To the client’s horror, the answer is that the venue was simply not suitable for a datacenter due to granite floorings and thick beams across the ceiling - resulting in an effective height that is simply inadequate for cabling and cooling purposes.

While it might not be possible for most organizations to put up custom-built datacenters on whim, what this client should have done was get an experienced consultant in and involved right from the get-go.

Ideally, in Mr. Guerou’s own words: “A datacenter should be a technical building dedicated to a very particular business of processing data.”

3. Buying by brands

Another common mistake is that many IT professionals attempt to buy into selected brands. While this strategy might work well when it comes to standardizing on servers or networking gear, an efficient and well-run datacenter has nothing to do with specific hardware brands or models. Rather, you should approach the datacenter from the perspective of a complete solution, where the entire design has to be considered as an integrated whole.

As an unfortunate side-effect of strong marketing by enterprise vendors, many users have, consciously or subconsciously, bought into the idea of designing a datacenter by snapping together disparate pieces of hardware. While not wrong, it’s imperative that the end-result be evaluated as a whole - and not in a piecemeal fashion.

Various hardware, such as types of servers, positioning of racks, networking equipment and redundant power supplies should dovetail properly with infrastructure such as cooling, ventilation, wiring, fire-suppression systems, and security measures.

4. Rushing onto the “Green IT” bandwagon

The increasing popularity of “Green IT” has vendors unveiling new servers and equipment touted for their superior power efficiency. While the idea is definitely laudable, you should separate the marketing hype from actual operational consumption.

For example, two UPS units from “vendor X,” while individually more power efficient at 90% loading, might actually make a much poorer showing if deployed in a redundant configuration, where they will end up running at 45% loading. In the absence of proper scrutiny, green IT initiatives could degenerate into a numbers game.
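The arithmetic behind that example can be sketched in a few lines of Python. The load, the capacity, and especially the efficiency figures below are invented purely for illustration and do not come from any vendor datasheet; the only point is that a unit quoted at one load level may run at a very different level once redundancy is added.

```python
# Hypothetical efficiency curve for one UPS: load fraction -> efficiency.
# These values are illustrative assumptions, not vendor data.
EFFICIENCY_AT_LOAD = {0.45: 0.88, 0.90: 0.95}

def per_unit_load(total_load_kw, unit_capacity_kw, units_sharing):
    """Fraction of its capacity each UPS carries when the load is shared evenly."""
    return total_load_kw / (unit_capacity_kw * units_sharing)

def efficiency_at(load_fraction):
    """Look up the assumed efficiency for a load fraction listed in the table."""
    return EFFICIENCY_AT_LOAD[round(load_fraction, 2)]

def input_power_kw(total_load_kw, efficiency):
    """Power drawn upstream to deliver total_load_kw at the given efficiency."""
    return total_load_kw / efficiency

load_kw = 90.0       # assumed IT load
capacity_kw = 100.0  # assumed rating of each UPS

single = per_unit_load(load_kw, capacity_kw, 1)     # one unit carries everything
redundant = per_unit_load(load_kw, capacity_kw, 2)  # two units share the same load

for label, fraction in (("single", single), ("redundant pair", redundant)):
    drawn = input_power_kw(load_kw, efficiency_at(fraction))
    print(f"{label}: {fraction:.0%} loading, {drawn:.1f} kW drawn")
```

With real equipment, the table would be replaced by the efficiency curve measured or published for the specific model, evaluated at the loading the units will actually see.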

At the end of the day, you should take the overall power efficiency, or power factor, of the entire datacenter as the benchmark rather than weigh individual vendor claims. After all, the yardstick of a well-run datacenter has always been power efficiency.

In parting, Mr. Guerou has the following advice for organizations thinking of building their own datacenter. “Hire an experienced consultant.”

iSCSI is the future of storage

This week, HP announced its $360 million acquisition of LeftHand Networks. Last year, Dell surprised the tech industry with a $1.4 billion purchase of the formerly independent EqualLogic. With these iSCSI snap-ups by true tech titans, iSCSI has officially arrived, is here to stay, and, I believe, will become the technology of choice for most organizations in the future.

This is not to say that iSCSI has been sitting in the background up to this point. On the contrary, the technology has taken the industry by storm. Both EqualLogic and LeftHand based their entire business hopes on the possibility that organizations would see the intrinsic value to be found in iSCSI’s simple installation and management. To say that both companies have been successful would be an understatement.

I’m a big fan of both EqualLogic and LeftHand Networks offerings, having purchased an EqualLogic unit in a former life. At that time, I narrowed my selection down to two options - LeftHand and EqualLogic. Both solutions had their pros and cons, but both were more than viable.

It’s not all about EqualLogic and LeftHand, though. The big guns in storage have finally jumped feet first into the iSCSI fray with extremely compelling products of their own. Previously, these players, including EMC and NetApp, simply bolted iSCSI onto existing products. Lately, even the biggest Fibre Channel vendors are releasing native iSCSI arrays aimed at the mid-tier of the market. EMC’s AX4, for example, is available in both native iSCSI and native Fibre Channel versions and is priced in such a way that any organization considering EqualLogic or LeftHand should make sure to give the EMC AX4 a look. To be fair, the iSCSI-only AX4:

Does not support SAN copy for SAN to SAN replication

Is not as easy to install or manage as one of the aforementioned devices, but isn’t bad either

Does not increase the bandwidth to the array as additional space is added

Does not include thin provisioning, although this was rumored to be rectified in a future software release

Supports up to 64 attached hosts

But the price per TB is simply incredible, and a solution based on a different vendor would not have been attainable. This year, I purchased just shy of 14 TB of raw space on a pair of AX4 arrays (4.8 TB SAS and 9 TB SATA) for under $40K. For the foreseeable future, I don’t need SAN copy and space can be managed in ways other than through thin provisioning. Over time, we’ll run about two dozen virtual machines on the AX4 along with our administrative databases and Exchange 2007 databases. By the time I need additional features, the AX4 will be due for replacement anyway.
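To put a number on that price per TB, here is a quick back-of-the-envelope calculation in Python. It uses only the figures quoted above; because the purchase price is given as “under $40K,” the result is an upper bound rather than an exact cost.

```python
sas_tb = 4.8
sata_tb = 9.0
price_ceiling_usd = 40_000  # "under $40K", so treat this as an upper bound

raw_tb = sas_tb + sata_tb
max_cost_per_tb = price_ceiling_usd / raw_tb

# Prints: 13.8 TB raw, under $2,899 per raw TB
print(f"{raw_tb:.1f} TB raw, under ${max_cost_per_tb:,.0f} per raw TB")
```

Note that this is cost per raw TB; usable capacity after RAID overhead and hot spares will be lower, so the cost per usable TB will be correspondingly higher.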

iSCSI started out at the low end of the market, helping smaller organizations begin to move toward shared storage and away from direct attached solutions. As time goes on, iSCSI is moving up the food chain and, in many cases, is supplanting small and mid-sized Fibre Channel arrays, particularly in organizations that have never had a SAN before. As iSCSI continues to take advantage of high-speed SAS disks and begins to use 10Gb Ethernet for a transport mechanism, I see iSCSI continuing to move higher into the market. Of course, faster, more reliable disks and faster networking capabilities will begin to close the savings gap between iSCSI and Fibre Channel, but iSCSI’s reliance on Ethernet for an underlying transport mechanism brings major simplicity to the storage equation and I doubt that iSCSI’s costs will ever surpass Fibre Channel anyway, mainly due to the expensive networking hardware needed for significant Fibre Channel implementations.

Even though iSCSI will continue to make inroads further into many organizations, I don’t think that iSCSI will ever completely push Fibre Channel out of the way. Many organizations rely on the raw performance afforded by Fibre Channel and the folks behind Fibre Channel’s specifications aren’t sitting still. Every year brings advances to Fibre Channel, including faster disks and improved connection speeds.

In short, I see the iSCSI market continuing to grow very rapidly and, over time, supplanting what would have been Fibre Channel installations. Further, as organizations continue to expand their storage infrastructures, iSCSI will be a very strong contender, particularly as the solution is updated to take advantage of improvements to the networking speed and disk performance.

Introduction to Policy-Based Management in SQL Server 2008

Policy-Based Management in SQL Server 2008 allows the database administrator (DBA) to define policies that tie to database instances and objects. These policies allow the DBA to specify rules that govern how objects and their properties are created or modified. An example of this would be a database-level policy that prevents the AutoShrink property from being enabled for a database. Another example would be a policy that ensures the names of all triggers created on a database table begin with tr_.

As with any new SQL Server technology (or Microsoft technology in general), there is a new object naming nomenclature associated with Policy-Based Management. Below is a listing of some of the new base objects.

Policy - A Policy is a set of conditions specified on the facets of a target. In other words, a Policy is basically a set of rules specified for properties of database or server objects.

Target - A Target is an object that is managed by Policy-Based Management. Targets include objects such as the database instance, a database, table, stored procedure, trigger, or index.

Facet - A Facet is a property of an object (target) that can be involved in Policy-Based Management. An example of a Facet is the name of a Trigger or the AutoShrink property of a database.

Condition - A Condition is the criteria that can be specified for a Target’s Facets. For example, you can set a condition for a Facet that specifies that all stored procedure names in the Schema ‘Banking’ begin with ‘bnk_’.

You can also assign a policy to a category. This allows you to manage a set of policies assigned to the same category. A policy belongs to only one category.
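To make the relationship between these terms concrete, here is a small conceptual model in Python. It is not SQL Server code and does not use any SQL Server API; the class names, condition names, and sample objects are all hypothetical, and for simplicity each policy here holds a single condition. It only illustrates how a Condition tests the Facets of a Target and how a Policy reports the Targets that fail.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

@dataclass
class Target:
    """A managed object plus its Facets (properties)."""
    kind: str                   # e.g. "Trigger" or "Database"
    facets: Dict[str, object]   # e.g. {"Name": "tr_audit_insert"}

@dataclass
class Condition:
    """A named test applied to a Target's Facets."""
    name: str
    test: Callable[[Dict[str, object]], bool]

@dataclass
class Policy:
    """Ties a Condition to one kind of Target; belongs to exactly one category."""
    name: str
    target_kind: str
    condition: Condition
    category: str = "Default"

    def violations(self, targets: List[Target]) -> List[Target]:
        """Return the targets of the matching kind that fail the condition."""
        return [t for t in targets
                if t.kind == self.target_kind and not self.condition.test(t.facets)]

# The two example rules mentioned in the introduction.
trigger_prefix = Condition("TriggerNamePrefix",
                           lambda f: str(f["Name"]).startswith("tr_"))
no_autoshrink = Condition("AutoShrinkOff", lambda f: f["AutoShrink"] is False)

policies = [
    Policy("Trigger naming", "Trigger", trigger_prefix, category="Naming"),
    Policy("No AutoShrink", "Database", no_autoshrink, category="Maintenance"),
]

# Hypothetical objects to evaluate.
targets = [
    Target("Trigger", {"Name": "tr_audit_insert"}),
    Target("Trigger", {"Name": "audit_delete"}),
    Target("Database", {"Name": "Sales", "AutoShrink": True}),
]

for policy in policies:
    failing = [t.facets["Name"] for t in policy.violations(targets)]
    print(f"{policy.name} ({policy.category}): {failing}")
```

In this toy run, the trigger audit_delete and the database Sales are the objects that would be flagged.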

Policy Evaluation Modes

A Policy can be evaluated in a number of different ways:

On demand - The policy is evaluated only when run directly by the administrator.

On change: prevent - DDL triggers are used to prevent policy violations.

On change: log only - Event notifications are used to check a policy when a change is made.

On schedule - A SQL Agent job is used to periodically check policies for violations.

Advantages of Policy-Based Management

Policy-Based Management gives you much more control over your database procedures as a DBA. You have the ability to enforce your paper policies at the database level. Paper policies are great for defining database standards and guidelines. However, it takes time and effort to enforce them. To strictly enforce them, you have to go over your database with a fine-toothed comb. With Policy-Based Management, you can define your policies and rest assured that they will be enforced.

Next Time

Today I took a look at the basic ideas behind Policy-Based Management in SQL Server 2008. In my next article, I’ll show how you can make these ideas a reality by creating your own policies to administer your SQL Server.

Defining SQL Server 2008 Policies

Policy-Based Management is a new SQL Server 2008 feature that gives the database administrator the ability to define and enforce policies through the database engine. In today’s article, I’ll look at how you can use SQL Server Management Studio to define your own policies.

Define your Policies

The most challenging part of creating an effective database policy system is deciding exactly what you want to create policies for. SQL Server 2008 provides a large range of Facets (objects) for which conditions and policies can be defined, so it will absolutely be worth the effort to take some time to map out which Policies you want to enforce.

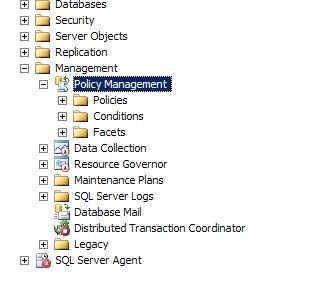

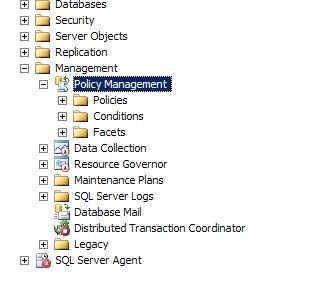

To define a new Policy, open SQL Server Management Studio and navigate to the Management node in Object Explorer. Before I can define a Policy, I’ll first need to define a new Condition and can easily do so by right-clicking on the Conditions folder under the Policy Management folder.

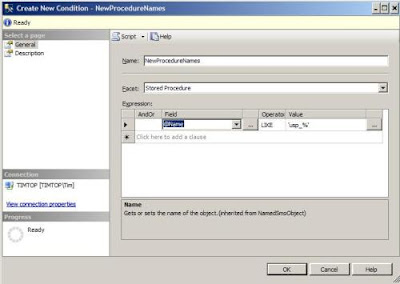

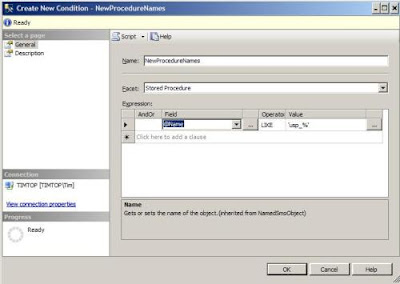

A Condition is a set of criteria defined on a Facet. A Facet is really nothing more than a SQL Server object that you can involve in a Policy. In the Create New Condition screen, I define a new Condition named NewStoredProcedureNames. I can define the criteria for my new Condition in the Expressions section. Each Facet (Stored Procedure in this case) has a set of Fields for which condition expressions can be defined. For this particular Condition, I want to set criteria so that any new Stored Procedure name begins with usp_, and this is fairly straightforward to do through the editor.

Now that I have my Condition defined, I can create a new Policy.

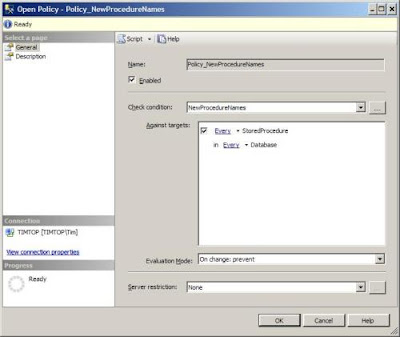

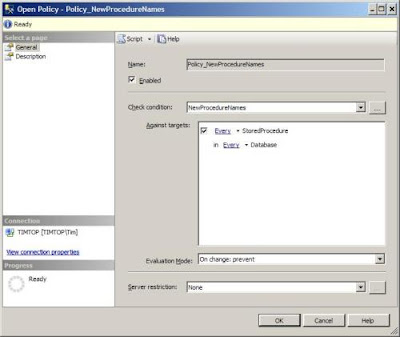

Right-click the Policies folder and select New Policy. In the Open Policy window, choose the NewStoredProcedureNames check condition we just created. Choose the On change: prevent Evaluation Mode. This mode will evaluate the Policy when a new stored procedure is created, and if the procedure name does not start with usp_, an error will be thrown and the new procedure will be disallowed. Be sure to check the Enabled box to enable the Policy.

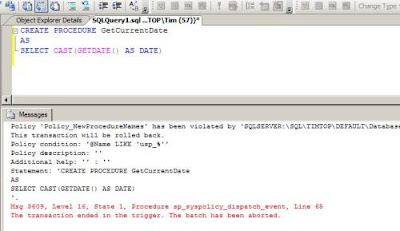

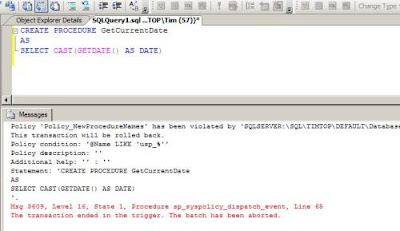

To test my new Policy, I write a script to create a new stored procedure named GetCurrentDate that returns the current date. When I attempt to execute the script, I receive an error message letting me know that I have violated a Policy. For a friendlier message, you can define informative descriptions with your Policies so that the user is given more instruction as to what condition was violated.

Here is the text of the procedure I attempted to create above.

CREATE PROCEDURE GetCurrentDate
AS
SELECT CAST(GETDATE() AS DATE)

Conclusion

Today I defined a simple Policy to prevent the creation of any new stored procedure whose name does not begin with usp_. The great thing about Policy-Based Management is that you can make your Policies as simple or as complex as your written database standards require. The more you play around with defining policies, the more creative and effective you’ll become at defining your own, so take advantage as soon as you can!

See what process is using a TCP port in Windows Server 2008

You may find yourself frequently going to network tools to determine traffic patterns from one server to another; Windows Server 2008 (and earlier versions of Windows Server) can give you that information locally for its own connections. You can combine the netstat and tasklist commands to determine what process is using a port on the Windows Server.

The following command will show what network traffic is in use at the port level:

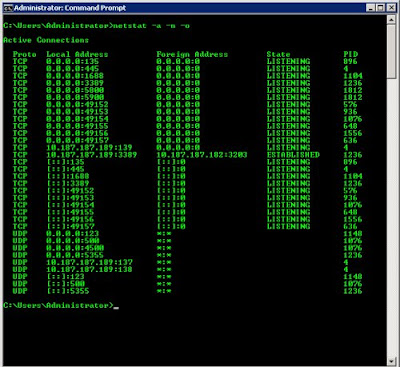

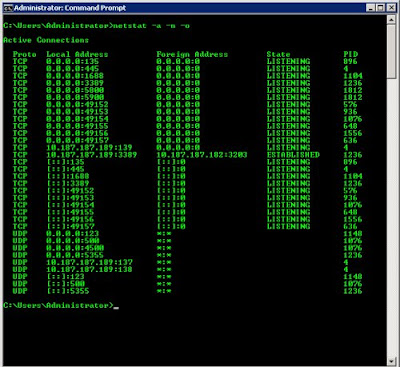

netstat -a -n -o

The -o parameter will display the associated process identifier (PID) using the port. This command will produce an output similar to what is in Figure A.

Figure A

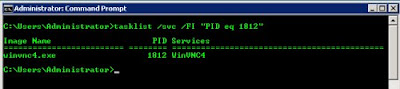

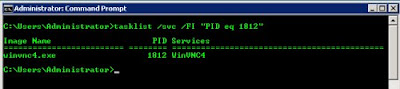

With the PIDs listed in the netstat output, you can follow up with the Windows Task Manager (taskmgr.exe) or run a script with a specific PID that is using a port from the previous step. You can then use the tasklist command with the specific PID that corresponds to a port in question. From the previous example, ports 5800 and 5900 are used by PID 1812, so using the tasklist command will show you the process using the ports. Figure B shows this query.

Figure B

This identifies VNC as the process using the ports. While a quick Google search on the port numbers could possibly obtain the same result, this procedure can be extremely helpful when you’re trying to identify a malicious process that may be running on the Windows Server.
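The two steps above can also be scripted. The following Python sketch parses text in the shape that netstat -a -n -o prints and builds the matching tasklist call. The function names are hypothetical, and the sample text is made up to mirror the ports and PID mentioned above; it is not copied from Figure A.

```python
def pids_by_port(netstat_output: str) -> dict:
    """Map local port -> PID from the text printed by `netstat -a -n -o`."""
    ports = {}
    for line in netstat_output.splitlines():
        fields = line.split()
        # Data rows start with the protocol; the PID is the last column.
        if not fields or fields[0] not in ("TCP", "UDP") or not fields[-1].isdigit():
            continue
        # The local address looks like 0.0.0.0:5900 or [::]:5900.
        port = fields[1].rsplit(":", 1)[-1]
        if port.isdigit():
            ports.setdefault(int(port), int(fields[-1]))
    return ports

def tasklist_command(pid: int) -> list:
    """Build the tasklist call that shows the process behind a PID."""
    return ["tasklist", "/FI", f"PID eq {pid}"]

# Hypothetical sample output, for illustration only.
SAMPLE = """
  Proto  Local Address          Foreign Address        State           PID
  TCP    0.0.0.0:5800           0.0.0.0:0              LISTENING       1812
  TCP    0.0.0.0:5900           0.0.0.0:0              LISTENING       1812
  UDP    0.0.0.0:123            *:*                                    1044
"""

ports = pids_by_port(SAMPLE)
print(ports[5900], tasklist_command(ports[5900]))

# On a live Windows server, the equivalent would be along these lines:
#   import subprocess
#   live = subprocess.run(["netstat", "-a", "-n", "-o"],
#                         capture_output=True, text=True).stdout
#   subprocess.run(tasklist_command(pids_by_port(live)[5900]))
```

When the same port appears on several rows (for example, IPv4 and IPv6 listeners), this sketch keeps the first PID it sees, which is enough for a quick lookup but not for a full audit.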

Consider running the browser service on Windows Server 2008 DCs

Many Windows administrators, myself included, are trying to stop using NetBIOS and switch to DNS exclusively for name resolution. But under certain situations, a Windows Server 2008 domain controller may not display networks correctly when browsing the network.

For Windows Server 2008 installations, the computer browser is disabled by default, and dcpromo does not change the configuration of the service when Active Directory is installed. The network browsing is convenient for drive mappings and quick access to systems, and this browsing depends on the short name features of NetBIOS.

One way to correct these computer display issues is to configure the computer browser service to be an automatic starting service. There are a number of ways to do this, including the sc command. Figure A shows the sc command used to configure the service to be automatic and then immediately start the computer browser service.

Figure A
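The figure itself is not reproduced here. As a rough sketch, the two steps it describes could be scripted as follows. This assumes the Computer Browser service’s short name is Browser (an assumption worth confirming with sc query on your own server), and the function names are hypothetical. By default the script only prints the commands; running them for real requires an elevated prompt on the server.

```python
import subprocess

SERVICE = "Browser"  # assumed short name of the Computer Browser service

def browser_service_commands(service: str = SERVICE) -> list:
    """Return the sc calls that set a service to automatic start, then start it."""
    return [
        # sc expects "start=" and its value as separate tokens.
        ["sc", "config", service, "start=", "auto"],
        ["sc", "start", service],
    ]

def run_commands(commands, dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)

run_commands(browser_service_commands())
```

Keeping the dry run as the default makes it easy to review exactly what will be executed before changing a service on a domain controller.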

Applying this configuration to the domain controllers that hold the flexible single master operation (FSMO) roles can prevent browse-ready computers from being removed from the display. However, this service ships with a default state of Disabled, and you should change it only if your browse-ready list of computers is shrinking or shows only the local subnet.

NetBIOS resolution is handy except on very large Active Directory networks. Larger networks are better served by the Windows Server 2008 GlobalNames zone.

Tuesday, October 7, 2008

10 things you should know about launching an IT consultancy

Oh yeah. You’re going to work for yourself, be your own boss. Come and go when you want. No more kowtowing to The Man, right?

Running your own computer consulting business is rewarding, but it’s also full of numerous and competing challenges. Before you make the jump into entrepreneurship, take a moment to benefit from a few hundred hours of research I’ve invested and the real-world lessons I’ve learned in launching my own computer consulting franchise.

There are plenty of launch-your-own-business books out there. I know. I read several of them. Most are great resources. Many provide critical lessons in best managing liquid assets, understanding opportunity costs, and leveraging existing business relationships. But when it comes down to the dirty details, here are 10 things you really, really need to know (in street language) before quitting your day job.

#1: You need to incorporate

You don’t want to lose your house if a client’s data is lost. If you try hanging out a shingle as an independent lone ranger, your personal assets could be at risk. (Note that I’m not dispensing legal or accounting advice. Consult your attorney for legal matters and a qualified accountant regarding tax issues.)

Ultimately, life is easier when your business operates as a business and not as a side project you maintain when you feel like it. Clients appreciate the assurance of working with a dedicated business. I can’t tell you how many clients I’ve obtained whose last IT guy “did it on the side” and has now taken a corporate job and doesn’t have time to help the client whose business has come to a standstill because of computer problems. Clients want to know you’re serious about providing service and that they’re not entering a new relationship in which they’re just going to get burned again in a few months’ time.

#2: You need to register for a federal tax ID number

Next, you need to register for a federal tax ID number. Hardly anyone (vendors, banks, and even some clients) will talk to you if you don’t.

Wait a second. Didn’t you just complete a mountain of paperwork to form your business (either as a corporation or LLC)? Yes, you did. But attorneys and online services charge incredible rates to obtain a federal tax ID for you.

Here’s a secret: It’s easy. Just go to the IRS Web site, complete and submit form SS-4 online, and voila. You’ll be the proud new owner of a federal tax ID.

#3: You need to register for a state sales tax exemption

You need a state sales tax exemption, too (most likely). If you’re in a state that collects sales tax, you’re responsible for ensuring sales tax gets paid on any item you sell a client. In such states, whether you buy a PC for a customer or purchase antivirus licenses, taxes need to be paid.

Check your state’s Web site. Look for information on the state’s department of revenue. You’ll probably have to complete a form, possibly even have it notarized, and return it to the state’s revenue cabinet. Within a few weeks, you’ll receive an account number. You’ll use that account number when you purchase products from vendors. You can opt NOT to pay sales tax when you purchase the item, instead choosing to pay the sales tax when you sell the item to the client.

Why do it this way? Because many (most) consultants charge clients far more for a purchase than the consultant paid. Some call it markup; accountants prefer to view it as profit. But you certainly don’t want to have to try to determine what taxes still need to be paid if some tax was paid earlier. Thus, charge tax at the point of sale to the customer, not when you purchase the item.

#4: You need to register with local authorities

Local government wants its money, too. Depending on where your business is located and services customers, you’ll likely need to register for a business license. As with the state sales tax exemption, contact your local government’s revenue cabinet or revenue commission for more information on registering your business. Expect to pay a fee for the privilege.

#5: QuickBooks is your friend

Once your paperwork’s complete, it’s time for more paperwork. In fact, you’d better learn to love paperwork as a business owner. There’s lots of it, whether it’s preparing quarterly tax filings, generating monthly invoicing, writing collection letters, or simply returning monthly sales reports to state and local revenue cabinets.

QuickBooks can simplify the process. From helping keep your service rates consistent (you’ll likely want one level for benchwork, another for residential or home office service, and yet a third for commercial accounts) to professionally invoicing customers, QuickBooks can manage much of your finances.

I recommend purchasing the latest Pro version, along with the corresponding Missing Manual book for the version you’ve bought. Plan on spending a couple of weekends, BEFORE you’ve launched your business, doing nothing but studying the financial software. Better yet, obtain assistance from an accountant or certified QuickBooks professional to set up your initial Chart of Accounts. A little extra time taken on the front end to ensure the software’s configured properly for your business will save you tons of time on the backend. I promise.

#6: Backend systems will make or break you

Speaking of backend, backend systems are a pain in the you-know-what. And by backend, I mean all your back office chores, from marketing services to billing to vendor management and fulfillment. Add call management to the list, too.

Just as when you’re stuck in traffic driving between service calls, you don’t make any money when you’re up to your elbows in paper or processing tasks. It’s frustrating. Clients want you to order a new server box, two desktops, and a new laptop. They don’t want to pay a markup, either. But they’re happy to pay you for your time to install the new equipment.

Sound good? It’s not.

Consider the facts. You have to form a relationship with the vendor. It will need your bank account information, maybe proof of insurance (expect to carry one million dollars of general liability), your state sales tax exemption ID, your federal employer ID, a list of references, and a host of other information that takes a day to collect. Granted, you have to do that only once (with each vendor, and you’ll need about 10), but then you still have to wade through their catalogs, select the models you need, and configure them with the appropriate tape arrays, software packages, etc. That takes an hour alone. And again, you’re typically not getting paid for this research. Even if you mark hardware sales up 15 percent, don’t plan on any Hawaiian vacation as a result.
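A back-of-the-envelope sketch shows why the margin is thin. Only the 15 percent markup comes from the paragraph above; the $4,000 order size, the three unpaid hours, and the $100 hourly rate are hypothetical numbers chosen for illustration.

```python
from decimal import Decimal


def markup_profit(hardware_cost: Decimal, markup_rate: Decimal) -> Decimal:
    """Gross profit earned from marking up a hardware order."""
    return hardware_cost * markup_rate


MARKUP_RATE = Decimal("0.15")       # the 15 percent markup mentioned above
hardware_cost = Decimal("4000")     # hypothetical order size
unpaid_hours = Decimal("3")         # hypothetical research and ordering time
hourly_rate = Decimal("100")        # hypothetical billable rate

gross = markup_profit(hardware_cost, MARKUP_RATE)
forgone_billing = unpaid_hours * hourly_rate
net = gross - forgone_billing

print(f"Markup earned: ${gross}")
print(f"Billable time given up: ${forgone_billing}")
print(f"What the markup really nets: ${net}")
```

Under these assumed numbers, half of the markup is eaten by time you could have billed elsewhere, and that is before counting the day spent setting up each vendor account.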

Add in similar trials and tribulations with your marketing efforts, billing systems, vendor maintenance, channel resellers, management issues, etc., and you can see why many consultants keep a full-time office manager on staff. It’s no great revelation of my business strategy to say that’s why I went with a franchise group. I have a world of backend support ready and waiting when I need it. I can’t imagine negotiating favorable or competitive pricing with computer manufacturers, antivirus vendors, or Microsoft if I operated on my own.

Before you open your doors, make sure that you know how you’ll tackle these wide-ranging back office chores. You’ll be challenged with completing them on an almost daily basis.

#7: Vendor relationships will determine your success

This is one of those business facets I didn’t fully appreciate until I was operating on my own. Everyone wants you to sell their stuff, right? How hard can it be for the two of you to hook up?

Well, it’s hard, as it turns out, to obtain products configured exactly as your client needs, quickly and at a competitive price, if you don’t have strong vendor relationships. That means you’ll need to spend time at trade shows and on the telephone developing business relationships with everyone from software manufacturers and hardware distributors to local computer store owners who keep life-saving SATA disks and Cat 5 patch cables in stock when you can’t wait five days for them to show up via UPS.

Different vendors have their own processes, so be prepared to learn myriad ways of signing up and jumping through hoops. Some have online registrations; others prefer faxes and notarized affidavits. Either way, they all take time to launch, so plan on beginning vendor discussions, and establishing your channel relationships, months in advance of opening your consultancy.

#8: You must know what you do (and explain it in 10 seconds or less)

All the start-your-own-business books emphasize writing your 50-page business plan. Yes, I did that. And do you know how many times I’ve referred to it since I opened my business? Right: not once.

The written business plan is essential. Don’t get me wrong. It’s important because it gets you thinking about all those topics (target markets, capitalization, sales and marketing, cash flow requirements, etc.) you must master to be successful.

But here’s what you really need to include in your business plan: a succinct and articulate explanation of what your business does, how the services you provide help other businesses succeed, and how you’re different. Oh, and you need to be able to explain all that in 10 seconds or less.

Really. I’m not kidding.

Business Network International (plan on joining the chapter in your area) is on to something when it allots members just 30 seconds or so to explain what they do and the nature of their competitive advantage. Many times I’ve been approached by prospective customers in elevators, at stoplights (with the windows down), and just as I was getting into my car in a parking lot. Sometimes they have a quick question; other times they need IT help right now. Here’s the best part: they don’t always know it.

The ability to quickly communicate the value of the services you provide is paramount to success. Ensure that you can rattle off a sincere description of what you do and how you do it in 10 seconds and without having to think about it. It must be a natural reaction you develop to specific stimuli. You’ll cash more checks if you do.

#9: It’s all about the branding

Why have I been approached by customers at stoplights, in parking lots, and in elevators? I believe in branding. And unlike many pop business books that broach the subject of branding but don’t leave you with any specifics, here’s what I mean by that.

People know what I do. Give me 10 seconds and I can fill in any knowledge gaps quickly. My “brand” does much of the ice breaking for me. I travel virtually nowhere without it. My company’s logo and telephone number are on shirts. Long sleeve, short sleeve, polos, and dress shirts; they all feature my logo. Both my cars are emblazoned with logos, telephone numbers, and simple marketing messages (which I keep consistent with my Yellow Pages and other advertising).

I have baseball hats for casual trips to Home Depot. My attaché features my company logo. My wife wears shirts displaying the company logo when grocery shopping. After I visit clients, even their PC bears a shiny silver sticker with my logo and telephone number.

Does it work? You better believe it. Hang out a shingle and a few people will call. Plaster a consistent but tasteful logo and simple message on your cars, clothing, ads, Web site, etc., and the calls begin stacking up.

Do you have to live, eat, and breathe the brand? No. But it helps. And let’s face it. After polishing off a burrito and a beer, I don’t mind someone asking if they can give me their laptop to repair when I approach my car in a parking lot. Just in case they have questions, I keep brochures, business cards and notepads (again, all featuring my logo and telephone number) in my glove box. You’d be surprised how quickly I go through them. I am.

#10: A niche is essential

The business plan books touch on this, but they rarely focus on technology consultants directly. You need to know your market niche. I’m talking about your target market here.

Will you service only small businesses? If so, you’d better familiarize yourself with the software they use. Or are you targeting physicians? In that case, you’d better know all things HIPAA, Intergy, and Medisoft (among others).

Know up front that you’re not going to be able to master everything. I choose to manage most Windows server, desktop, and network issues. When I encounter issues with specific medical software, dental systems, or client relationship software platforms, I call in an expert trained on those platforms. We work side by side to iron out the issue.

Over time, that strategy provides me with greater penetration into more markets than if I concentrated solely on mastering medical systems, for example. Plus, clients respect you when you tell them you’re outside your area of expertise. It builds trust, believe it or not.

Whatever you choose to focus on, ensure that you know your niche. Do all you can to research your target market thoroughly and understand the challenges such clients battle daily. Otherwise, you’ll go crazy trying to develop expertise with Medisoft databases at the same time Intel’s rolling out new dual-core chips and Microsoft’s releasing a drastically new version of Office.

Running your own computer consulting business is rewarding, but it’s also full of numerous and competing challenges. Before you make the jump into entrepreneurship, take a moment to benefit from a few hundred hours of research I’ve invested and the real-world lessons I’ve learned in launching my own computer consulting franchise.

There are plenty of launch-your-own-business books out there. I know. I read several of them. Most are great resources. Many provide critical lessons in best managing liquid assets, understanding opportunity costs, and leveraging existing business relationships. But when it comes down to the dirty details, here are 10 things you really, really need to know (in street language) before quitting your day job.

#1: You need to incorporate

You don’t want to lose your house if a client’s data is lost. If you try hanging out a shingle as an independent lone ranger, your personal assets could be at risk. (Note that I’m not dispensing legal or accounting advice. Consult your attorney for legal matters and a qualified accountant regarding tax issues.)

Ultimately, life is easier when your business operates as a business and not as a side project you maintain when you feel like it. Clients appreciate the assurance of working with a dedicated business. I can’t tell you how many clients I’ve obtained whose last IT guy “did it on the side,” has since taken a corporate job, and no longer has time to help a client whose business has come to a standstill because of computer problems. Clients want to know you’re serious about providing service and that they’re not entering a new relationship in which they’re just going to get burned again in a few months’ time.

#2: You need to register for a federal tax ID number

Next, you need to register for a federal tax ID number. Hardly anyone (vendors, banks, and even some clients) will talk to you if you don’t.

Wait a second. Didn’t you just complete a mountain of paperwork to form your business (either as a corporation or LLC)? Yes, you did. But attorneys and online services charge incredible rates to obtain a federal tax ID for you.

Here’s a secret: It’s easy. Just go to the IRS Web site, complete and submit Form SS-4 online, and voilà. You’ll be the proud new owner of a federal tax ID.

#3: You need to register for a state sales tax exemption

You need a state sales tax exemption, too (most likely). If you’re in a state that collects sales tax, you’re responsible for ensuring sales tax gets paid on any item you sell a client. In such states, whether you buy a PC for a customer or purchase antivirus licenses, taxes need to be paid.

Check your state’s Web site. Look for information on the state’s department of revenue. You’ll probably have to complete a form, possibly even have it notarized, and return it to the state’s revenue cabinet. Within a few weeks, you’ll receive an account number. You’ll use that account number when you purchase products from vendors. You can opt NOT to pay sales tax when you purchase the item, instead choosing to pay the sales tax when you sell the item to the client.
